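I. Introduction to Spring AI

Spring AI is an application framework designed to integrate AI capabilities into applications seamlessly. It builds on the familiar patterns and principles of the Spring ecosystem and emphasizes modularity and ease of use, which makes it suitable both for experienced AI developers and for newcomers to the field.

The main features of Spring AI include:

- Portable AI model API: Spring AI provides a unified API across multiple AI model providers, such as OpenAI, Google, Amazon, and Microsoft. This makes it possible to switch between AI technologies without significant changes to the underlying application code.

- Support for multiple AI services: the platform supports a range of services, from chatbots and image generation to embedding models, giving flexibility to match project needs.

- ETL framework and vector databases: to support data engineering, Spring AI includes an ETL (extract, transform, load) framework that helps prepare data for AI models. It also supports various vector databases for storing and managing the transformed data efficiently.

- Function calling and auto-configuration: developers can expose Java functions directly to AI model interactions, which simplifies code and reduces overhead. In addition, Spring AI integrates with Spring Boot auto-configuration, making AI applications easier to set up and run.

- Community and documentation: Spring AI has extensive documentation and a developer community, providing plenty of resources for learning and troubleshooting.

By emphasizing ease of use, modularity, and integration, Spring AI aims to make AI application development accessible across different programming environments, making it a strong choice for projects that need AI capabilities.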

II. Project Creation

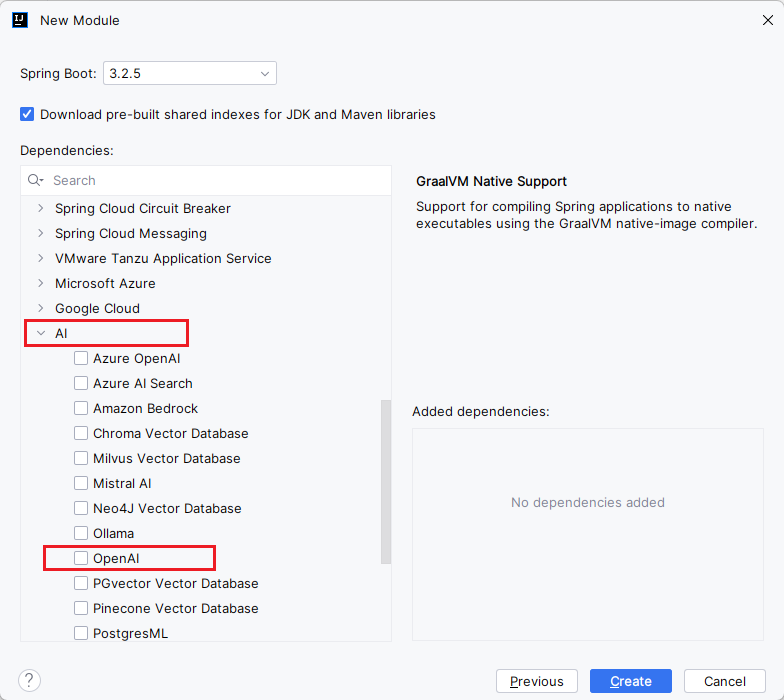

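In newer versions of Spring Boot, the relevant AI module group can be selected directly:

Initializing with IDEA: IntelliJ IDEA's Spring Boot project wizard now includes Spring AI options, so we can select them directly when creating the project.

Once the project is generated, let's look at its pom.xml: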

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.2.5</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.example</groupId>
    <artifactId>spring-OpenAI-chat</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>spring-OpenAI-chat</name>
    <description>spring-OpenAI-chat</description>
    <properties>
        <java.version>17</java.version>
        <spring-ai.version>0.8.1</spring-ai.version>
    </properties>
    <dependencies>
        <!-- Spring Web -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <!-- Spring AI OpenAI starter (version managed by the BOM below) -->
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-openai-spring-boot-starter</artifactId>
        </dependency>
        <!-- Spring Boot DevTools (hot reload) -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-devtools</artifactId>
            <scope>runtime</scope>
            <optional>true</optional>
        </dependency>
        <!-- Lombok -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
        <!-- Spring Boot test support -->
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <dependencyManagement>
        <dependencies>
            <!-- Spring AI version management (BOM) -->
            <dependency>
                <groupId>org.springframework.ai</groupId>
                <artifactId>spring-ai-bom</artifactId>
                <version>${spring-ai.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
                <configuration>
                    <excludes>
                        <exclude>
                            <groupId>org.projectlombok</groupId>
                            <artifactId>lombok</artifactId>
                        </exclude>
                    </excludes>
                </configuration>
            </plugin>
        </plugins>
    </build>
    <repositories>
        <repository>
            <id>spring-milestones</id>
            <name>Spring Milestones</name>
            <url>https://repo.spring.io/milestone</url>
            <snapshots>
                <enabled>false</enabled>
            </snapshots>
        </repository>
    </repositories>
</project>
```

III. OpenAI API Key

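①Magic Shell API

Pros: all APIs are free, and $66 of credit is given on sign-up

Cons: may not last long

Link: https://api.freegpt.art/register/?aff_code=WXpt

API address: https://api.freegpt.art

②burn.hair

Pros: you can check in daily to claim credit

Cons: not suitable for heavy use, but fine for learning

Sign-up link: https://burn.hair/register?aff=LcMy

OpenAI API relay:

Replace the API address with https://burn.hair or https://burn.hair/v1

③GPT_API_free

Pros: free GPT-3.5 API

Cons: no GPT-4 API

Sign-up link: https://github.com/chatanywhere/GPT_API_free

Apply for a free API key through GPT_API_free:

④SMNET AI API

Pros: $500 of credit on sign-up

Cons: no GPT-4

Link: https://api.smnet1.asia/register?aff=IsMi

API address: https://api.smnet1.asia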

IV. Spring AI API

Chat Properties

Retry Properties

The spring.ai.retry prefix configures the retry mechanism of the OpenAI chat client.

| Property | Description | Default |
|---|---|---|
| spring.ai.retry.max-attempts | Maximum number of retry attempts. | 10 |
| spring.ai.retry.backoff.initial-interval | Initial sleep duration for the exponential backoff policy. | 2 sec. |
| spring.ai.retry.backoff.multiplier | Backoff interval multiplier. | 5 |
| spring.ai.retry.backoff.max-interval | Maximum backoff duration. | 3 min. |
| spring.ai.retry.on-client-errors | If false, throw a NonTransientAiException and do not retry on 4xx client error codes. | false |
| spring.ai.retry.exclude-on-http-codes | List of HTTP status codes that should not trigger a retry (e.g. to throw NonTransientAiException). | empty |
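To see what these defaults mean in practice, the sketch below computes the wait before each retry. It assumes the classic exponential backoff rule (the first wait equals initial-interval, each later wait is multiplied by multiplier, every wait is capped at max-interval) and assumes max-attempts counts the first call too, as Spring Retry's maxAttempts does, so 10 attempts leave at most 9 waits. BackoffSchedule and delays are hypothetical names; this models the settings, it is not Spring AI's code.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: models the exponential backoff configured by spring.ai.retry.*
class BackoffSchedule {

    // Returns the wait before each retry. With maxAttempts calls in total there are
    // maxAttempts - 1 waits; each wait is the previous one times the multiplier, capped at max.
    static List<Duration> delays(int maxAttempts, Duration initial, double multiplier, Duration max) {
        List<Duration> waits = new ArrayList<>();
        long currentMillis = initial.toMillis();
        for (int retry = 1; retry < maxAttempts; retry++) {
            waits.add(Duration.ofMillis(Math.min(currentMillis, max.toMillis())));
            // Cap the running value too, so it cannot overflow for large attempt counts
            currentMillis = (long) Math.min(currentMillis * multiplier, max.toMillis());
        }
        return waits;
    }

    public static void main(String[] args) {
        // Defaults from the table: 10 attempts, 2 s initial interval, multiplier 5, 3 min cap
        List<Duration> waits = delays(10, Duration.ofSeconds(2), 5, Duration.ofMinutes(3));
        waits.forEach(w -> System.out.println(w.toSeconds() + " s"));
        Duration total = waits.stream().reduce(Duration.ZERO, Duration::plus);
        System.out.println("worst-case total wait: " + total.toSeconds() + " s");
    }
}
```

Under these assumptions the waits are 2, 10, 50 and then 180 seconds six times, about 1142 s in total, so a request that keeps failing can hold an interactive endpoint such as /chat for roughly 19 minutes; lowering max-attempts is one way to shorten that.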

Connection Properties

The spring.ai.openai prefix holds the properties used to connect to OpenAI.

| Property | Description | Default |
|---|---|---|
| spring.ai.openai.base-url | The URL to connect to | https://api.openai.com |
| spring.ai.openai.api-key | The API Key | - |
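This matters for the relay services in section III. Assuming Spring AI 0.8.x appends the fixed path /v1/chat/completions to the base URL (as its OpenAiApi client does in that release line), the base URL should be given without a trailing /v1. The sketch below, with a hypothetical EndpointResolver, picks the chat-specific spring.ai.openai.chat.base-url override from the next table if present, falls back to spring.ai.openai.base-url, then to the default, and joins the path. Stripping a trailing slash is this sketch's own choice, not a claim about Spring.

```java
// Hypothetical helper: derives the chat endpoint from the connection properties.
class EndpointResolver {

    static final String DEFAULT_BASE_URL = "https://api.openai.com";
    // Assumption: the chat path that Spring AI 0.8.x appends to the base URL
    static final String CHAT_PATH = "/v1/chat/completions";

    // chatBaseUrl = spring.ai.openai.chat.base-url, baseUrl = spring.ai.openai.base-url (either may be null)
    static String chatEndpoint(String chatBaseUrl, String baseUrl) {
        String effective = firstNonBlank(chatBaseUrl, baseUrl, DEFAULT_BASE_URL);
        // Drop one trailing slash so the result does not contain "//v1"
        if (effective.endsWith("/")) {
            effective = effective.substring(0, effective.length() - 1);
        }
        return effective + CHAT_PATH;
    }

    private static String firstNonBlank(String... candidates) {
        for (String candidate : candidates) {
            if (candidate != null && !candidate.isBlank()) {
                return candidate;
            }
        }
        throw new IllegalArgumentException("no base URL configured");
    }

    public static void main(String[] args) {
        System.out.println(chatEndpoint(null, null));
        System.out.println(chatEndpoint(null, "https://api.freegpt.art"));
        // A base URL that already ends in /v1 duplicates the version segment
        System.out.println(chatEndpoint(null, "https://burn.hair/v1"));
    }
}
```

With the relay from service ①, this yields https://api.freegpt.art/v1/chat/completions, while https://burn.hair/v1 as the base URL would produce a doubled /v1/v1 path, which is why the form without /v1 is the one to use here.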

Configuration Properties

The spring.ai.openai.chat prefix configures the chat client implementation for OpenAI.

| Property | Description | Default |
|---|---|---|
| spring.ai.openai.chat.enabled | Enable OpenAI chat client. | true |
| spring.ai.openai.chat.base-url | Optional override of spring.ai.openai.base-url to provide a chat-specific URL | - |
| spring.ai.openai.chat.api-key | Optional override of spring.ai.openai.api-key to provide a chat-specific API key | - |
| spring.ai.openai.chat.options.model | The OpenAI chat model to use | gpt-3.5-turbo (gpt-3.5-turbo, gpt-4, and gpt-4-32k point to the latest model versions) |
| spring.ai.openai.chat.options.temperature | The sampling temperature, which controls the apparent creativity of generated completions. Higher values make output more random, while lower values make results more focused and deterministic. Modifying both temperature and top_p in the same request is not recommended, because the interaction of the two settings is hard to predict. | 0.8 |
| spring.ai.openai.chat.options.frequencyPenalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on their existing frequency in the text so far, decreasing the model's likelihood to repeat the same line verbatim. | 0.0f |
| spring.ai.openai.chat.options.logitBias | Modify the likelihood of specified tokens appearing in the completion. | - |
| spring.ai.openai.chat.options.maxTokens | The maximum number of tokens to generate in the chat completion. The total length of input tokens and generated tokens is limited by the model's context length. | - |
| spring.ai.openai.chat.options.n | How many chat completion choices to generate for each input message. You are charged for the generated tokens across all choices, so keep n at 1 to minimize costs. | 1 |
| spring.ai.openai.chat.options.presencePenalty | Number between -2.0 and 2.0. Positive values penalize new tokens based on whether they appear in the text so far, increasing the model's likelihood to talk about new topics. | - |
| spring.ai.openai.chat.options.responseFormat | An object specifying the format the model must output. Setting it to { "type": "json_object" } enables JSON mode, which guarantees the generated message is valid JSON. | - |
| spring.ai.openai.chat.options.seed | Beta feature. If specified, OpenAI makes a best effort to sample deterministically, so repeated requests with the same seed and parameters should return the same result. | - |
| spring.ai.openai.chat.options.stop | Up to 4 sequences where the API will stop generating further tokens. | - |
| spring.ai.openai.chat.options.topP | An alternative to sampling with temperature, called nucleus sampling, where the model considers only the tokens within the top_p probability mass. So 0.1 means only the tokens comprising the top 10% probability mass are considered. Alter this or temperature, but not both. | - |
| spring.ai.openai.chat.options.tools | A list of tools the model may call. Currently only functions are supported as tools. Use this to provide a list of functions the model may generate JSON inputs for. | - |
| spring.ai.openai.chat.options.toolChoice | Controls which function (if any) the model calls. none means the model will not call a function and instead generates a message; auto means the model can choose between generating a message and calling a function. Specifying a particular function via {"type": "function", "function": {"name": "my_function"}} forces the model to call that function. none is the default when no functions are present; auto is the default if functions are present. | - |
| spring.ai.openai.chat.options.user | A unique identifier representing your end user, which can help OpenAI monitor and detect abuse. | - |
| spring.ai.openai.chat.options.functions | List of functions, identified by their names, to enable for function calling in a single prompt request. Functions with those names must exist in the functionCallbacks registry. | - |
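The sampling options above are applied on OpenAI's side, not inside Spring AI. As a rough mental model only (a textbook sketch, not OpenAI's implementation), the code below applies three of them to a toy vector of logits. The penalties subtract count * frequencyPenalty, plus presencePenalty if the token has appeared at all, from a token's logit, which is the adjustment OpenAI's API documentation has described (worth checking against the current docs). Temperature divides the logits before the softmax, and top-p keeps the smallest set of most likely tokens whose probabilities reach topP. SamplingSketch, the logits and the counts are illustrative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Toy illustration of frequency/presence penalties, temperature, and top-p (nucleus) sampling.
class SamplingSketch {

    // logit[j] - counts[j] * frequencyPenalty - (counts[j] > 0 ? presencePenalty : 0)
    static double[] applyPenalties(double[] logits, int[] counts, double frequencyPenalty, double presencePenalty) {
        double[] out = logits.clone();
        for (int j = 0; j < out.length; j++) {
            out[j] -= counts[j] * frequencyPenalty + (counts[j] > 0 ? presencePenalty : 0.0);
        }
        return out;
    }

    // softmax(logits / temperature); a lower temperature sharpens the distribution
    static double[] softmax(double[] logits, double temperature) {
        if (temperature <= 0) {
            // The sketch rejects 0 to avoid dividing by zero (0 would mean greedy decoding)
            throw new IllegalArgumentException("temperature must be > 0");
        }
        double max = Arrays.stream(logits).max().orElse(0.0);
        double[] p = new double[logits.length];
        double sum = 0.0;
        for (int j = 0; j < logits.length; j++) {
            // Subtracting the max keeps exp() from overflowing
            p[j] = Math.exp((logits[j] - max) / temperature);
            sum += p[j];
        }
        for (int j = 0; j < p.length; j++) {
            p[j] /= sum;
        }
        return p;
    }

    // Indices of the smallest set of most probable tokens whose total probability reaches topP
    static List<Integer> nucleus(double[] probs, double topP) {
        List<Integer> order = new ArrayList<>();
        for (int j = 0; j < probs.length; j++) {
            order.add(j);
        }
        order.sort(Comparator.comparingDouble((Integer j) -> probs[j]).reversed());
        List<Integer> kept = new ArrayList<>();
        double cumulative = 0.0;
        for (int j : order) {
            kept.add(j);
            cumulative += probs[j];
            if (cumulative >= topP) {
                break;
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        double[] logits = {2.0, 1.0, 0.5, -1.0};
        System.out.println(Arrays.toString(softmax(logits, 1.0)));
        System.out.println(Arrays.toString(softmax(logits, 0.2)));
        System.out.println(nucleus(softmax(logits, 1.0), 0.9));
        System.out.println(Arrays.toString(applyPenalties(logits, new int[]{3, 0, 1, 0}, 0.5, 0.2)));
    }
}
```

Running it shows why the table advises changing temperature or topP but not both: each one already reshapes which tokens are likely to be picked.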

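V. Using Spring AI

Based on the configuration properties described in section IV, configure application.yml:

```yaml
spring:
  application:
    name: spring-OpenAI-chat
  ai:
    openai:
      # Placeholder: use your own key
      api-key: sk-xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx
      base-url: https://api.freegpt.art
      chat:
        options:
          model: gpt-4o
```

The api-key is the one obtained by registering with service ① in section III:

Go to the site, click "令牌" (Tokens), and copy the token: that is the API key.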

①Chat

1.1 Code

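```java
import jakarta.annotation.Resource;
import org.springframework.ai.chat.ChatResponse;
import org.springframework.ai.chat.prompt.Prompt;
import org.springframework.ai.openai.OpenAiChatClient;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

// Controller class name is illustrative
@RestController
public class ChatController {

    // Injected from the bean created by Spring AI's OpenAI auto-configuration
    @Resource
    private OpenAiChatClient openAiChatClient;

    /**
     * Endpoint that sends a message to the OpenAI chat client.
     *
     * @param msg the message sent to the OpenAI chat client
     * @return the reply text returned by the OpenAI chat client
     */
    @RequestMapping("/chat")
    public String chat(@RequestParam(value = "msg") String msg) {
        // call(String) wraps msg in a user message and returns only the reply text
        return openAiChatClient.call(msg);
    }

    /**
     * Endpoint that sends a message to the OpenAI chat client.
     *
     * @param msg the message sent to the OpenAI chat client
     * @return a ChatResponse containing the reply content and metadata
     */
    @RequestMapping("/chat2")
    public Object chat2(@RequestParam(value = "msg") String msg) {
        // call(Prompt) returns the whole ChatResponse (generations plus metadata)
        ChatResponse chatResponse = openAiChatClient.call(new Prompt(msg));
        return chatResponse;
    }
}
```

1.2 The OpenAiChatClient class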

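OpenAiChatClient is a class in the Spring AI library that implements the ChatClient interface and is used to interact with OpenAI. Its main job is to send messages to OpenAI and receive the replies. In this project it handles the chat functionality.

@Resource (jakarta.annotation.Resource) is a Jakarta annotation that Spring supports for dependency injection. It can be placed on fields and setter methods. Here it is applied to a field of type OpenAiChatClient, so Spring injects an OpenAiChatClient instance; @Resource first looks for a bean whose name matches the field name and falls back to matching by type.

The most important method of this class is call: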

```java
// OpenAiChatClient.call(Prompt) (spring-ai-openai 0.8.1)
public ChatResponse call(Prompt prompt) {
    OpenAiApi.ChatCompletionRequest request = this.createRequest(prompt, false);
    return (ChatResponse) this.retryTemplate.execute((ctx) -> {
        ResponseEntity<OpenAiApi.ChatCompletion> completionEntity = (ResponseEntity) this.callWithFunctionSupport(request);
        OpenAiApi.ChatCompletion chatCompletion = (OpenAiApi.ChatCompletion) completionEntity.getBody();
        if (chatCompletion == null) {
            logger.warn("No chat completion returned for prompt: {}", prompt);
            return new ChatResponse(List.of());
        } else {
            RateLimit rateLimits = OpenAiResponseHeaderExtractor.extractAiResponseHeaders(completionEntity);
            List<Generation> generations = chatCompletion.choices().stream().map((choice) -> {
                return (new Generation(choice.message().content(), this.toMap(chatCompletion.id(), choice)))
                        .withGenerationMetadata(ChatGenerationMetadata.from(choice.finishReason().name(), (Object) null));
            }).toList();
            return new ChatResponse(generations,
                    OpenAiChatResponseMetadata.from((OpenAiApi.ChatCompletion) completionEntity.getBody()).withRateLimit(rateLimits));
        }
    });
}

// ChatClient interface: default convenience overload used by the /chat endpoint
default String call(String message) {
    Prompt prompt = new Prompt(new UserMessage(message));
    Generation generation = this.call(prompt).getResult();
    return generation != null ? generation.getOutput().getContent() : "";
}
```

call is a public method of OpenAiChatClient that takes a Prompt and returns a ChatResponse. The call(String) overload shown after it is a default method of the ChatClient interface: it wraps the string in a UserMessage, calls call(Prompt), and returns the text of the first result, or an empty string if there is none.

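The main steps of call(Prompt) are:

- First, it creates an OpenAiApi.ChatCompletionRequest from the given Prompt. This request is used to talk to the OpenAI API.
- Next, it runs a lambda through retryTemplate. RetryTemplate comes from the Spring Retry project and automatically retries an operation when it fails (configured by the spring.ai.retry properties in section IV).
- Inside the lambda, it calls callWithFunctionSupport with the request to get a response from the OpenAI API.
- It then reads the OpenAiApi.ChatCompletion body from the response. If the body is null, it logs a warning and returns an empty ChatResponse.
- If the body is not null, it extracts RateLimit information from the response headers and reads the choices list from the ChatCompletion.
- For each element of choices, it creates a Generation whose content is the choice's message content, attaches the completion id and choice details as properties, and sets the generation metadata from the choice's finish reason.
- Finally, it builds a new ChatResponse from the list of Generations and response metadata carrying the RateLimit information, and returns it.

In short, the method sends the Prompt to the OpenAI API and returns a ChatResponse containing the result.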
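To make this control flow concrete without the Spring AI dependencies, here is a stripped-down model of the same steps. Every type in it (Choice, Completion, Generation, ChatFlowSketch) is a hypothetical stand-in, and the simple loop replaces RetryTemplate (fixed attempt count, no backoff, retrying on any RuntimeException). It mirrors the shape of the code above, not the real API.

```java
import java.util.List;
import java.util.function.Supplier;

// Dependency-free model of OpenAiChatClient.call(Prompt) and the ChatClient.call(String) default.
class ChatFlowSketch {

    record Choice(String content, String finishReason) {}
    record Completion(String id, List<Choice> choices) {}
    record Generation(String content, String finishReason) {}

    // Stand-in for retryTemplate.execute(...): rerun the action until it succeeds or attempts run out
    static <T> T withRetry(int maxAttempts, Supplier<T> action) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e;
            }
        }
        throw last;
    }

    // Mirrors call(Prompt): a null completion gives an empty result, otherwise one Generation per choice
    static List<Generation> call(Supplier<Completion> api, int maxAttempts) {
        return withRetry(maxAttempts, () -> {
            Completion completion = api.get();
            if (completion == null) {
                return List.of();
            }
            return completion.choices().stream()
                    .map(c -> new Generation(c.content(), c.finishReason()))
                    .toList();
        });
    }

    // Mirrors call(String): the first generation's text, or "" when there is none
    static String callText(Supplier<Completion> api) {
        List<Generation> generations = call(api, 3);
        return generations.isEmpty() ? "" : generations.get(0).content();
    }

    public static void main(String[] args) {
        Completion fake = new Completion("chatcmpl-1", List.of(new Choice("Hello!", "STOP")));
        System.out.println(callText(() -> fake));
        System.out.println(callText(() -> null).isEmpty());
    }
}
```

The supplier plays the role of callWithFunctionSupport, which lets the retry and null-handling branches be exercised with fake completions instead of real HTTP calls.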

1.3 Running result
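To reproduce a run of the /chat endpoint from 1.1 from another program, the msg parameter has to be URL-encoded, which matters especially for non-ASCII prompts. A minimal sketch with the JDK's URLEncoder and HttpClient, assuming the application runs on the default http://localhost:8080 (adjust host and port to your setup); ChatEndpointClient and chatUri are hypothetical names.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Minimal client for the /chat endpoint; the base address is an assumption.
class ChatEndpointClient {

    // Builds e.g. http://localhost:8080/chat?msg=Hello+world (spaces become '+', UTF-8 bytes become %XX)
    static URI chatUri(String baseAddress, String msg) {
        return URI.create(baseAddress + "/chat?msg=" + URLEncoder.encode(msg, StandardCharsets.UTF_8));
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(chatUri("http://localhost:8080", "Tell me a joke about Java"))
                .GET()
                .build();
        // Requires the Spring Boot application to be running
        HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```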

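1.4 Expected result

This is a blog I built as an undergraduate; it used DaoVoice, an online chat-window service. The current idea is to see later whether my own system can integrate some kind of online chat program.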

②Image

2.1 Code

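```java
import jakarta.annotation.Resource;
import org.springframework.ai.image.ImagePrompt;
import org.springframework.ai.image.ImageResponse;
import org.springframework.ai.openai.OpenAiImageClient;
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

// Controller class name is illustrative
@RestController
public class ImageController {

    @Resource
    private OpenAiImageClient imageClient;

    @RequestMapping("/image")
    public Object image(@RequestParam("msg") String msg) {
        // Send the text description as an image-generation prompt
        ImageResponse imageResponse = imageClient.call(new ImagePrompt(msg));
        System.out.println(imageResponse);
        // Return the first generated image as the response body
        return new ResponseEntity<>(imageResponse.getResult().getOutput(), HttpStatus.OK);
    }
}
```

For this endpoint, switch to the API key from service ② in section III.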

2.2 Running result
